Hello, everyone! I know it’s been a while! My apologies. As mentioned in a previous post, I’ve been dabbling in science education on top of finishing my M.S. in Chemistry, so I’ve had my hands full. However, given that I (along with most of my readers, certainly) am under quarantine due to COVID-19, I’ve had a little time to revisit this blog. Expect a post on immunology to queue up shortly—this one is about the Major Histocompatibility Complex!—but first, let’s take a detour and learn about oxygen transport in your body. That’s right! It’s time to learn about hemoglobin and myoglobin.

Oxygen is, as I’m sure you’ve learned by now, quite important to our existence. We use it as the final electron acceptor in respiration, which means we need it to, you know, make more than two ATP per glucose molecule. However, oxygen doesn’t exactly like to just sit around in our blood. In fact, if oxygen had its way, there would only be 0.3 mL of it in each deciliter of blood.

That’s where hemoglobin comes in. With its four heme groups, it binds molecular oxygen and transports it throughout our blood rather efficiently. Thanks to hemoglobin, we can count on there being about 20 mL of oxygen per deciliter of blood under saturating conditions. A useful protein, wouldn’t you agree?

So, how does this oxygen transporter work? Well, it turns out, that’s a bit of a complicated question right off the bat. Thankfully, hemoglobin has a much less complicated cousin called myoglobin.

Myoglobin is a monomeric (one-unit) oxygen-carrying protein that’s found in muscles. Because it’s a monomer, its structure is significantly easier to study than hemoglobin’s. If you’re wondering why I chose to take a detour to talk about a seemingly unrelated protein, here’s the reason: the myoglobin monomer looks ridiculously similar to one of hemoglobin’s four subunits. (In fact, my undergraduate biochemistry professor claimed he would award an immediate A to anyone who could distinguish the two based upon visual inspection alone.) As you would expect, this means that myoglobin works very similarly to hemoglobin. That makes sense, as it earns its keep by providing muscles with oxygen when hemoglobin is falling down on the job, which happens essentially any time the cell is respiring. (Muscle cells are demanding little things.)

The strictly protein part of myoglobin, without the heme, is referred to as “apo-myoglobin,” and is composed of eight alpha helices in an “all alpha” structure. These eight helices make a nice little pocket in which a heme, which contains iron(II), can situate itself. The Fe2+ is coordinated by the nitrogens of the heme’s four pyrrole rings as well as by a histidine residue, the proximal histidine. In the vicinity there is also a distal histidine, which sits on the other side of the heme, farther from the iron.

(If you’re having difficulty visualizing this, don’t worry. There’s an illustration below.)

In this complicated arrangement, the heme stabilizes the protein because the heme, like the interior of the protein, is hydrophobic. The additional hydrophobic groups help keep the hydrophobic core of the protein happy.

Why does the structural layout of the protein around the heme matter? Well, it turns out that this very precise arrangement affords the myoglobin the ability to bind oxygen in a very interesting way. As inorganic chemists know, Fe2+ likes to have six ligands, or six things bound to it. However, in deoxy-Mb, it only has five—the four surrounding pyrroles, and the proximal histidine. Therefore, it would prefer to bind something like oxygen in the binding site opposite the proximal histidine. However, the distal histidine, the one that’s just kind of hanging out there above the binding site, is just close enough that oxygen can’t bind to the iron straight-on, but instead has to bind at an angle. This is important, as it prevents the very tight binding of poisonous carbon monoxide, which prefers to bind in a linear conformation.

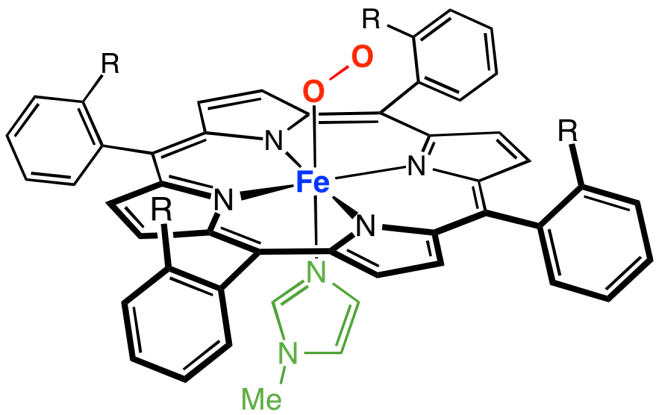

Here’s an image of the whole setup (courtesy of Smokefoot). The green bit at the bottom is the proximal histidine, and the distal histidine isn’t shown. (Notice the four coordinated nitrogens in the plane of the heme and the coordinated nitrogen from the proximal histidine underneath.)

Another relevant feature of this setup is that in deoxy-Mb, the proximal histidine pulls the Fe2+ out of the plane of the heme by about 0.4 Å, or a bit less than half the length of an O-H bond. When oxygen binds the Fe2+, it pulls it back into the plane. The iron pulls on the proximal histidine, which, in turn, pulls on the helix that it’s attached to, and ultimately the conformation of the whole protein changes.

Neat, right?

So, how would we describe the way oxygen binds to myoglobin? Well, you can thank a few chemists who liked math, because they figured that out with an equation. [uproarious whooping in the background, I’m sure]

It’s not too difficult to imagine that deoxy-Mb is in equilibrium with oxy-Mb. If you define Y as the fraction of Mb bound with oxygen, Y is equal to the partial pressure of oxygen (essentially, the concentration of oxygen) divided by the sum of the partial pressure of oxygen and the partial pressure of oxygen required to saturate 50% of the myoglobin, which, in this case, is 2 Torr. Yeehaw, right? I know. Here’s an easier way to look at it:

Y = pO2 / [pO2 + P50]

Quick, before I say anything else! What kind of curve is that?

Exactly! It’s a hyperbolic curve!

What that means, practically, is that, when pO2 is a lot smaller than P50, increasing the partial pressure of oxygen will linearly increase the fraction of Mb bound. Double the partial pressure of oxygen? Double the fraction of Mb bound. Easy-peasy. Then, when the partial pressure of oxygen equals P50, half of the Mb is bound. Since that’s the very definition of P50, that’s not too tricky. Finally, when your partial pressures of oxygen get a lot larger than P50, Y approaches pO2 / pO2, and the fraction of Mb bound approaches 1. Since the partial pressure of oxygen in our tissues (roughly 20 to 40 Torr) is way above 2 Torr, myoglobin is more than 90% saturated there. Cool, huh?
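If you’d like to poke at those three regimes yourself, here is a minimal Python sketch of the myoglobin equation. The function name `myoglobin_saturation` and the sample pressures are my own choices for illustration; the only numbers taken from the post are the equation itself and the P50 of 2 Torr.

```python
def myoglobin_saturation(p_o2, p50=2.0):
    """Fraction of myoglobin bound to oxygen: Y = pO2 / (pO2 + P50).

    p_o2 and p50 are partial pressures in Torr; p50 defaults to the
    2 Torr quoted in the post.
    """
    return p_o2 / (p_o2 + p50)


# Sample pressures (Torr) chosen for illustration: well below P50,
# exactly at P50, and well above P50.
for p_o2 in (0.1, 0.2, 2.0, 20.0, 40.0, 100.0):
    y = myoglobin_saturation(p_o2)
    print(f"pO2 = {p_o2:6.1f} Torr -> Y = {y:.3f}")
```

Going from 0.1 to 0.2 Torr very nearly doubles Y (the low-pressure, linear regime), 2 Torr gives exactly one half, and at 20 and 40 Torr Y comes out to about 0.91 and 0.95, which is close to, though not quite at, full saturation.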

Okay, okay, that’s all well and good, but what about hemoglobin? That’s the main player, right?

Right you are. Let’s take a look at it, shall we?

Unlike myoglobin, hemoglobin (Hb) is made up of four pieces called subunits: two alpha subunits, and two beta subunits. This makes it a tetramer. However, it’s more accurate to say that each alpha piece forms a dimer with a beta piece, and then both alpha-beta pieces combine to form hemoglobin. Therefore, it’s often described as a “dimer of dimers.” Since the alpha and beta subunits of hemoglobin are both incredibly similar to myoglobin, Hb can be said to be made up of four Mb-like structures. Since, like myoglobin, each subunit contains a heme, a single hemoglobin protein contains four hemes and can bind up to four molecules of oxygen. The subunits are held together by noncovalent forces—oppositely charged groups on different subunits interact to form salt bridges, hydrogen bonds form between H-bond acceptors and H-bond donors on different units, and hydrophobic pieces interact with other hydrophobic pieces to stabilize the whole arrangement.

We just talked about the binding of oxygen to myoglobin. Would you expect the same kind of thing to work for hemoglobin? If you’re like me, you would—after all, each little piece is basically a myoglobin protein, right? Turns out, though, that it’s even better than that. The binding of oxygen to hemoglobin is not only dependent upon the amount of oxygen the protein has available, like with myoglobin, but also dependent on whether other subunits have bound oxygen already.

This is called “cooperativity,” and it’s what makes hemoglobin so special. To explore this idea a little better, let’s look at an equation for the binding of oxygen to Hb based on partial pressures of oxygen. That equation looks like this:

Y = (pO2)^n / [(pO2)^n + (P50)^n], where P50 for hemoglobin is 26 Torr.

You’ll notice that this equation looks almost like the one for Mb, except now we have those suspicious-looking exponents. Those exponents mean that the binding curve for Hb is sigmoidal, not hyperbolic, when n > 1. They also mean that, at low pO2, increasing the partial pressure of oxygen doesn’t linearly increase the binding of hemoglobin.

Okay, but what does that even mean? Why are those exponents there?

The exponent n is called the “Hill coefficient,” and it describes the degree of cooperativity between the subunits of hemoglobin. When n = 1, the four subunits behave like four independent Mb units, and the curve is hyperbolic (like myoglobin’s). When n > 1, though, the binding of oxygen to one subunit increases the ability of the other subunits to bind another oxygen. The maximum value of the Hill coefficient is the number of binding sites in the protein. In hemoglobin’s case, that’s four.

What is the actual value of n? Good question. According to experimental data, the value of n for hemoglobin is 3.3, which means that hemoglobin displays nearly-perfect cooperativity. What that means practically is that, each time an oxygen molecule binds to hemoglobin, it betters the ability of the protein to bind the next molecule of oxygen.
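To see what n actually does to the curve, here is a small Python sketch of the Hill equation. It compares n = 1 (no cooperativity) with n = 3.3 at the same P50 of 26 Torr. The function name `hill_saturation` and the sample pressures are my own illustrative choices, and the n = 1 curve is a thought experiment, not a real protein.

```python
def hill_saturation(p_o2, p50, n):
    """Hill equation: Y = pO2^n / (pO2^n + P50^n).

    Dividing top and bottom by P50^n gives the equivalent form
    r / (1 + r) with r = (pO2 / P50)^n, which is what is computed here.
    """
    r = (p_o2 / p50) ** n
    return r / (1.0 + r)


P50_HB = 26.0  # Torr, from the post

print("pO2 (Torr)   n = 1   n = 3.3")
for p_o2 in (5.0, 10.0, 26.0, 40.0, 100.0):
    y_independent = hill_saturation(p_o2, P50_HB, 1.0)  # hyperbolic
    y_cooperative = hill_saturation(p_o2, P50_HB, 3.3)  # sigmoidal
    print(f"{p_o2:10.1f}   {y_independent:5.3f}   {y_cooperative:7.3f}")
```

Both curves cross Y = 0.5 at P50, but the cooperative one sits lower below P50 and higher above it. That is the S-shape: reluctant to bind at low pressures, eager at high ones.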

Why is that? Well, you remember that, in myoglobin, the iron is pulled back into the plane of the heme when it binds oxygen? That happens in hemoglobin, too. The difference is that, in hemoglobin, the other subunits are also helping keep the iron pulled out of the plane when it’s unbound. The binding of oxygen to the first heme in the first subunit, therefore, is relatively unfavorable (this is said to be the “T-state”, or “tense-state”). When the first oxygen binds to the first subunit of Hb, it tugs on that Fe2+, which in turn tugs on the proximal histidine coordinated with it, which then tugs on the helices the histidine is attached to. This weakens the interactions between the subunits, making it easier for the next oxygen to bind to the next heme. By the time three hemes are bound, the fourth is pumped up and just begging for an oxygen molecule (“R-state” or “relaxed-state”). Wikipedia user Habj made an awesome gif that illustrates the T-state versus the R-state:

This is really cool, right?

So, hemoglobin does a cool thing. But why? What’s useful about the binding of oxygen being dependent on something other than oxygen concentration?

Well, let’s think about it this way. In the arteries close to the lungs, oxygen tension approaches 100 Torr, which is way higher than the P50 of 26 Torr for hemoglobin. That means that, in the arteries, the amount of oxygen bound to hemoglobin is at a maximum. Once you move to peripheral tissues, however, oxygen tension quickly drops to 40 Torr, assuming you’re resting. Due to cooperativity, despite the fact that oxygen tension between the lungs and peripheral tissues decreases by 60 Torr, only 20% of hemoglobin’s oxygen gets dropped off!

In those same tissues, when you start exercising, the oxygen tension drops to somewhere around 20 Torr. That range brackets P50 (26 Torr), which is where the sigmoidal binding curve is at its steepest. (On a Hill plot, log[Y / (1 - Y)] versus log pO2, the slope there is the Hill coefficient.) All of this fancy math means that, between 40 Torr and 20 Torr, with only a 20 Torr drop in oxygen pressure, saturation tumbles from about 80% to about 30%, so roughly 70% of the oxygen hemoglobin picked up in the lungs has been released!

That’s the usefulness of cooperativity. When hemoglobin moves from the lungs to resting tissues, which don’t necessarily have a high oxygen demand, it holds on to most of its oxygen even though it experiences a big drop in oxygen pressure. However, the small drop from resting to exercising oxygen pressures within tissues, an indication that vigorous respiration is taking place, is enough to prompt it to offload plenty of oxygen for the respiring tissues while still retaining enough (30%) for emergencies. This ability to drop oxygen only where it’s needed is a feat that myoglobin, with its single, independent unit, can’t achieve.
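The delivery numbers above can be checked with a few lines of Python. This is a rough sketch under stated assumptions: it uses the Hill equation with the post’s values (P50 = 26 Torr and n = 3.3 for hemoglobin, P50 = 2 Torr and n = 1 for myoglobin) and the three oxygen tensions quoted above. The helper `fraction_released` is hypothetical, and the myoglobin row is purely a what-if, since myoglobin doesn’t actually travel in the blood.

```python
def hill_saturation(p_o2, p50, n):
    """Hill equation: Y = pO2^n / (pO2^n + P50^n)."""
    r = (p_o2 / p50) ** n
    return r / (1.0 + r)


# Oxygen tensions quoted in the post, in Torr.
LUNGS, RESTING, EXERCISING = 100.0, 40.0, 20.0


def fraction_released(p50, n):
    """Drop in saturation between the lungs and each kind of tissue.

    Returns (released by resting tissue, released by exercising tissue),
    both measured relative to the saturation reached in the lungs.
    """
    y_lungs = hill_saturation(LUNGS, p50, n)
    released_at_rest = y_lungs - hill_saturation(RESTING, p50, n)
    released_in_exercise = y_lungs - hill_saturation(EXERCISING, p50, n)
    return released_at_rest, released_in_exercise


# (P50 in Torr, Hill coefficient) for each carrier, values from the post.
carriers = {
    "hemoglobin": (26.0, 3.3),
    "myoglobin (what-if)": (2.0, 1.0),
}

for name, (p50, n) in carriers.items():
    rest, exercise = fraction_released(p50, n)
    print(f"{name:>20}: rest {rest:.0%}, exercise {exercise:.0%}")
```

Under these assumptions hemoglobin lets go of a bit under 20% of its oxygen at rest and roughly 70% during exercise, while the myoglobin-like carrier releases well under 10% in both cases. It simply holds on too tightly to be a useful delivery truck.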

It doesn’t stop there, either. The Bohr effect relates the concentration of CO2 or protons in tissues to hemoglobin’s ability to bind oxygen. Turns out, when oxygen binds to hemoglobin, it releases protons. That means that, when there are a lot of protons in a solution, the equilibrium is shifted toward unbound hemoglobin, meaning the T-state becomes favored. The same happens with high levels of CO2 for two reasons: firstly, carbon dioxide reacts with water to make carbonic acid, which releases protons and acidifies the environment, and secondly, CO2 covalently bonds to the amino end of hemoglobin and produces both protons and a carbamate anion that stabilizes the T-state. In either case, acidic environments with excess CO2 indicate respiration is taking place, and more oxygen than normal is released for respiring tissues. In the case of CO2, the hemoglobin also helps transport CO2 and protons to the lungs, where they’ll be eliminated.

(Please, just stop and give hemoglobin a round of applause. It’s so cool!)

There’s also a neat little molecule that affects the P50 of hemoglobin, called 2,3-bisphosphoglycerate, which is made by red blood cells. It binds to positive surfaces on the beta subunits of deoxy-Hb, which stabilizes the T-state and raises the P50 of hemoglobin. This ensures that more oxygen is made available to tissues at lower oxygen partial pressures. Our bodies use this to help us adjust to higher elevations, where oxygen is less available. When a human goes to a high altitude, over a period of days or even weeks, their RBCs will produce more 2,3-BPG, tweaking their hemoglobin so that it makes oxygen more available to their tissues. (Neat, right?)
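Here is a quick sketch of why raising P50 frees up more oxygen. Loud assumption: the raised P50 of 30 Torr below is a made-up illustrative value, not a measured one, and I am assuming the Hill coefficient stays at 3.3. Only the baseline P50 of 26 Torr and the tissue pressures of 40 and 20 Torr come from this post.

```python
def hill_saturation(p_o2, p50, n=3.3):
    """Hill equation: Y = pO2^n / (pO2^n + P50^n)."""
    r = (p_o2 / p50) ** n
    return r / (1.0 + r)


P50_BASELINE = 26.0  # Torr, from the post
P50_RAISED = 30.0    # Torr, HYPOTHETICAL value standing in for extra 2,3-BPG

for p_o2 in (40.0, 20.0):  # resting and exercising tissue, Torr
    y_base = hill_saturation(p_o2, P50_BASELINE)
    y_raised = hill_saturation(p_o2, P50_RAISED)
    print(f"pO2 = {p_o2:4.1f} Torr: Y = {y_base:.2f} at baseline, "
          f"{y_raised:.2f} with the raised P50")
```

At any given tissue pressure, the hemoglobin with the higher P50 ends up less saturated, which is just another way of saying it has handed over more of its oxygen.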

Okay. We’ve come this far, and I know what you might be thinking: since we’re talking about hemoglobin, what’s an important disease that we should talk about? Sickle cell anemia, perhaps? Wouldn’t you like to know what causes it?

Oh, come on, you want to know. The answer’s really simple. It’s caused by a single mutation in the beta subunit of hemoglobin that changes a glutamate to a valine. The glutamate, a negatively charged, hydrophilic residue, is situated near water, and in normal hemoglobin, it’s happy, and everything’s great. However, when you replace it with valine, which is hydrophobic, the valine doesn’t want to be near water, and instead it seeks out a hydrophobic environment. Its solution is ultimately to bury itself in a hydrophobic pocket on another tetramer. In the end, you get long chains of hemoglobin that aggregate, forcing red blood cells into their characteristic sickle shape.

Phew! Well, I’m thoroughly exhausted! I think I’m going to take a break now. Eat something, maybe. Take some deep breaths so that my oxygen partial pressures will go up a little bit.

Stay tuned in for a shift in topics. Now that we’re done talking about the way your body transports oxygen, we’re going to shift gears and look at how it combats viruses—and sometimes ends up killing itself in the crossfire.

You know what to do.